Two Simple Tricks For Professional Results.

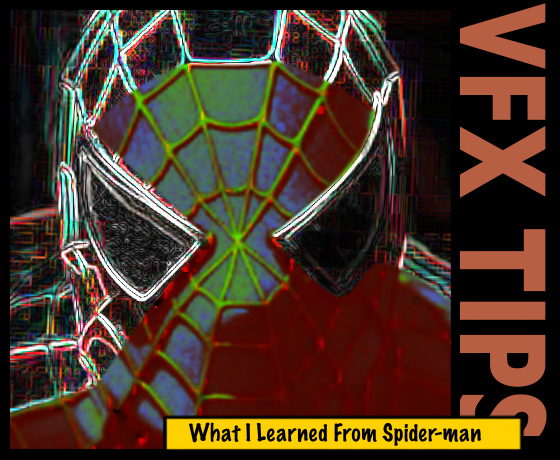

After the 1995 film Jumanji, checking the color of composited elements in a visual effects scene was all about gamma blasting and adjusting black levels. That rule of thumb changed during the 2002 Spider-Man talk at SIGGRAPH, when two new composite analysis methods leapt to the forefront to improve quality, and, shockingly, almost no one noticed.

John Dykstra, the visual effects supervisor, sat at the front of the theater at SIGGRAPH talking about the work Sony Imageworks did for the first Spider-Man movie. He led several discussions on character rigging, virtual cameras, web dynamics, and digital New York, all of them fascinating: giant teams of artists making onscreen magic that defined the parameters of a superhero film. However, amid all the intricate work, finely detailed models, complex BRDF samples, and virtual environments, a tiny section caught my eye. Two seemingly insignificant image processing techniques, normally of limited use straight out of the box, changed how I composite to this day.

Why Would I Ever Use This Filter?

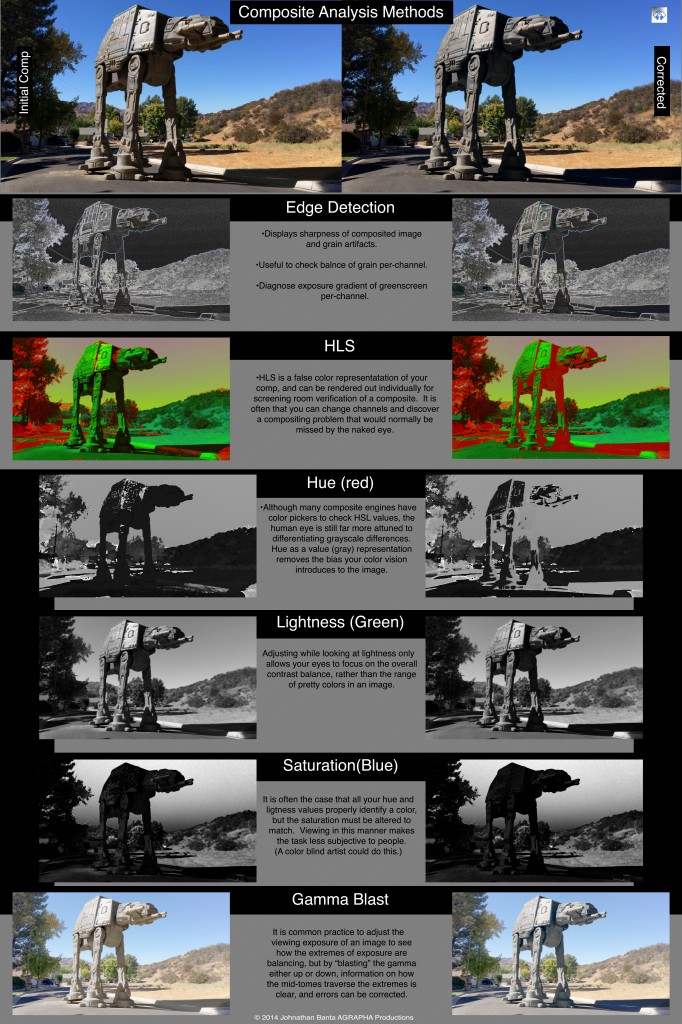

John sat casually talking, relaxed (as most Academy Award winners are), when an image of Spidey under a simple edge-detection filter popped up on the large projection screen behind him. Anyone who has gone through the list of built-in Photoshop filters has tried this one, and anyone with a phone app that turns photos into sketches has seen it; most never gave it another glance. It has little use for most people, since it does not create a photoreal effect, but during the compositing stage of Spider-Man this filter was used to analyze the sharpness of the image and to check whether the CGI edges properly matched the real edges of objects in the photography.

fxGuide noticed the use of the filter and summed up Dykstra’s description this way:

“They did what they called a ‘single pixel pass’ which was black with some fine coloured lines and looked like an edge detection filter in photoshop. They used this to detect detail that was too sharp – too CG – and created a matte from the single pixel pass to soften only those details that were too sharp.”
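The matte idea in that quote can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Imageworks’ actual pipeline: the image is a small grayscale 2D list with values in 0 to 1, “detail” is measured as the difference between each pixel and a 3×3 box-blurred copy, and the threshold and function names (`box_blur3`, `sharpness_matte`, `soften_where_sharp`) are hypothetical.

```python
def box_blur3(img):
    """3x3 box blur; pixels outside the image are clamped to the nearest edge."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total = 0.0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    yy = min(max(y + dy, 0), h - 1)
                    xx = min(max(x + dx, 0), w - 1)
                    total += img[yy][xx]
            out[y][x] = total / 9.0
    return out


def sharpness_matte(img, threshold):
    """Hypothetical 'too sharp' matte: 1.0 where local detail exceeds threshold."""
    blurred = box_blur3(img)
    return [[1.0 if abs(p - b) > threshold else 0.0
             for p, b in zip(row, brow)]
            for row, brow in zip(img, blurred)]


def soften_where_sharp(img, threshold=0.2):
    """Blend toward the blurred image only where the matte is on."""
    blurred = box_blur3(img)
    matte = sharpness_matte(img, threshold)
    return [[p * (1.0 - m) + b * m
             for p, b, m in zip(row, brow, mrow)]
            for row, brow, mrow in zip(img, blurred, matte)]
```

On a hard 0-to-1 step, only the two pixels straddling the edge exceed the threshold, so only they get softened (to about 1/3 and 2/3). A production version would feather the matte and use a proper defocus instead of a box blur; the hard 0/1 matte here just keeps the logic visible.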

The sleepy, “too much math” haze that SIGGRAPH occasionally produces quickly lifted. Something useful that does not require a degree in computer programming to implement in an existing pipeline always energizes me, and the idea of looking at something through “new eyes,” as it were, to understand what you were really seeing was intriguing.

Attention to detail is a given in a VFX company’s screening room (it is why screenings exist), but it has always been limited to what the participants can see. It is easy for artists to convince themselves that they have properly adjusted the sharpness of a composited element, but when a render of this type is projected for the whole team to tear apart, one can learn a little more about one’s bad habits. If you want to hear everyone’s opinion, just wait: they are about to share it. Again, that is what screening rooms are for.

There is precedent for non-photoreal viewing of pictures in remote imaging that harks all the way back to WWII, when infrared images were used to spot tanks. Camouflage relies on confusing the human visual system to hide weapons of war in plain sight. Our brain, accustomed to certain ways of seeing, does not want to work hard to identify images. It is lazy, and easy to trick when it encounters an image that roughly fits what it expects to see. However, when an image is presented to us in an unfamiliar way, the mind wakes up, and with our perception altered, details we did not previously notice stand out. With enough exposure to these altered visuals, we retrain the brain to recognize what the new details mean; in the case of the tank hunters, that there is a tank there.

An edge detection is essentially an analysis of the slope of an image (a simple 3×3 convolution filter). If the image were a 3D height field, it would show how far each surface normal tilts away from the up vector. Were the image a height map of the Grand Canyon, the steeper cliff edges would show up with greater intensity, and, for you texture painters, so would the edges where grass does not grow (that’s a texture painting hint).
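To make the slope idea concrete, here is a minimal Python sketch of an edge detector built from 3×3 convolutions. Photoshop’s Find Edges kernel is not described here, so this swaps in the standard Sobel operator as an assumption; pixel values are treated as heights, borders are clamped, and `slope_map` and `tilt_from_up` are hypothetical names.

```python
import math

# Standard Sobel kernels (a stand-in; not necessarily what Find Edges uses).
SOBEL_X = ((-1, 0, 1),
           (-2, 0, 2),
           (-1, 0, 1))
SOBEL_Y = ((-1, -2, -1),
           (0, 0, 0),
           (1, 2, 1))


def convolve3x3(img, kernel):
    """Apply a 3x3 kernel (as correlation) to a 2D list, clamping at the borders."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for ky in range(3):
                for kx in range(3):
                    yy = min(max(y + ky - 1, 0), h - 1)
                    xx = min(max(x + kx - 1, 0), w - 1)
                    acc += kernel[ky][kx] * img[yy][xx]
            out[y][x] = acc
    return out


def slope_map(img):
    """Per-pixel slope (height change per pixel) from Sobel gradients."""
    gx = convolve3x3(img, SOBEL_X)
    gy = convolve3x3(img, SOBEL_Y)
    # Sobel weights total 4 rows-worth across a 2-pixel span (4 * 2 = 8),
    # so dividing by 8 turns the response into a per-pixel derivative.
    return [[math.hypot(a, b) / 8.0 for a, b in zip(rx, ry)]
            for rx, ry in zip(gx, gy)]


def tilt_from_up(img):
    """Treating the image as a height field: angle in degrees between
    each surface normal and the up vector."""
    return [[math.degrees(math.atan(s)) for s in row] for row in slope_map(img)]
```

With this scaling, a ramp rising 0.1 per pixel reads as a slope of 0.1 (a tilt of roughly 5.7 degrees) away from the borders, and a flat image reads as zero everywhere. For a viewable “single pixel pass,” you would normalize `slope_map` for display, running it per channel if you want to see color fringing.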

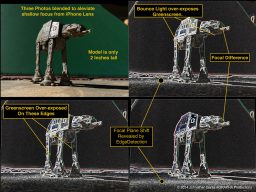

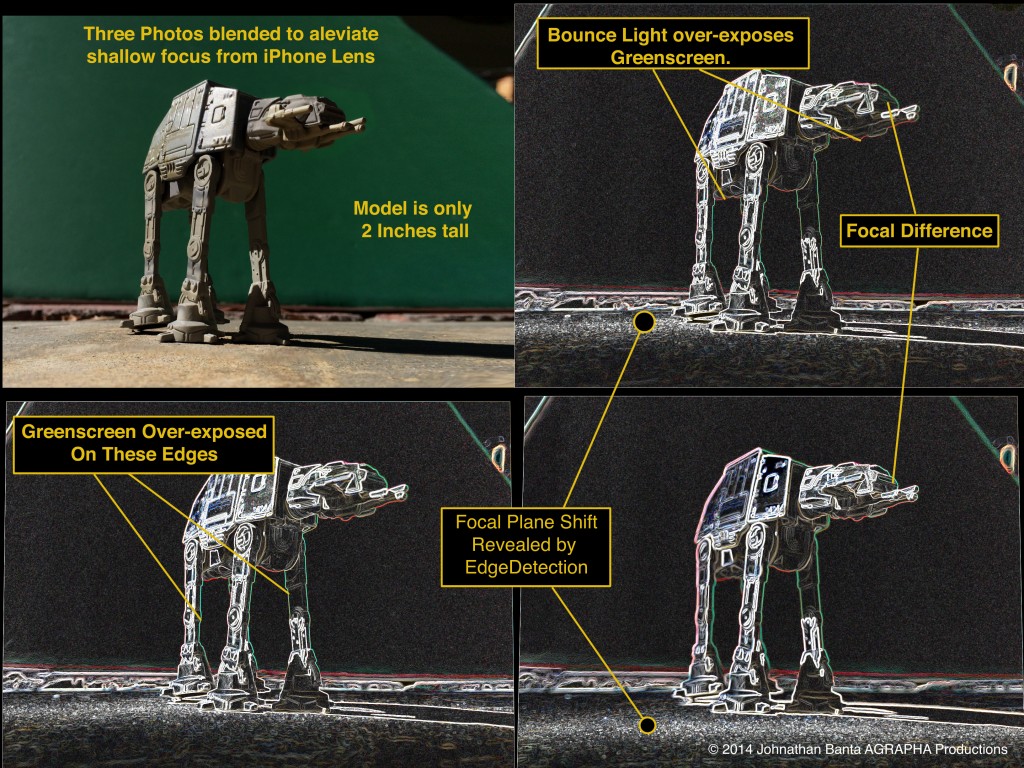

According to Dykstra, the filter was used only to check sharpness, as that was what concerned him at the time, but it carries a much wider range of information. Soft focus stands out, as does the color fringe created by an overexposed bluescreen or greenscreen. It reveals any part of the image with high detail, including differences in film grain.

Film grain or sensor noise exists in most images as an unavoidable artifact, and it is unique to each recorded picture. When a synthesized element must composite seamlessly with a photographed one, the two must match perfectly or the illusion suffers, so the grain must be replicated and balanced. Scanned grain images and Fourier frequency analysis plug-ins are the most common ways to add grain back in. These methods recreate the structure of the grain but do not always balance the amount of grain per channel, leaving that to the artist’s trial and error. Edge detection reveals this per-channel variance, so the adjustments can be fine-tuned.
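The per-channel grain check can be approximated numerically as well. This sketch assumes you sample a flat, featureless patch from the plate and a matching patch from the CG element (each a 2D list of (r, g, b) tuples), so that the high-frequency energy is mostly grain. Measuring that energy as the mean absolute difference from a 3×3 box blur is a simple stand-in for an edge-detection pass, and `grain_gain_per_channel` is a hypothetical helper.

```python
def highpass_energy(channel):
    """Mean absolute difference between each pixel and its 3x3 box-blurred
    value (borders clamped): a rough grain amplitude for a flat patch."""
    h, w = len(channel), len(channel[0])
    total = 0.0
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    yy = min(max(y + dy, 0), h - 1)
                    xx = min(max(x + dx, 0), w - 1)
                    acc += channel[yy][xx]
            total += abs(channel[y][x] - acc / 9.0)
    return total / (h * w)


def split_channels(patch):
    """Turn a 2D list of (r, g, b) tuples into three 2D lists, one per channel."""
    return [[[px[c] for px in row] for row in patch] for c in range(3)]


def grain_gain_per_channel(plate_patch, cg_patch):
    """Hypothetical helper: ratio of plate grain to CG grain for R, G and B.
    A ratio above 1 suggests the CG element needs more grain in that channel."""
    plate = [highpass_energy(ch) for ch in split_channels(plate_patch)]
    cg = [highpass_energy(ch) for ch in split_channels(cg_patch)]
    return tuple(p / c if c else float("inf") for p, c in zip(plate, cg))
```

Because the high-pass step is linear, grain that is twice as strong in the plate’s red channel as in the CG element yields a red ratio of about 2.0. That gives a starting point for per-channel grain gain before the final check by eye.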

What a wealth of information! Edge sharpness, image softness, color bleed, grain matching, and slope prediction for texture mapping. Not bad for a little 3×3 convolution filter. For me, it is hard to quantify how much a simple effect like edge detection has changed the quality of my work over the years.

The Color You Never Saw

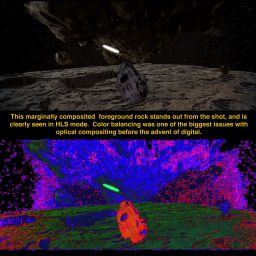

Our eyes do not perceive color well. The art of camouflage is evidence of this: introduce a few painted shadows on something green, and it is a plant to every observer not in the know. This again recalls the infrared film used in the war. Visual effects rarely uses infrared systems, but a similar method, changing color spaces, provides similar results, letting compositors better notice the color balance between their elements. I must again credit the VFX team on Spider-Man and their 2002 presentation for introducing me to this concept early in my career. Not only did John Dykstra casually throw out the Find Edges method (thanks again), he also described using HLS (or HSV) color space to check the balance of elements in a composite, emphasizing any anomalies.

Spider-Man has a specific red and blue suit, which must look like it exists in the photographed scene. That is easy with a stuntman (since he was there on the day), but Sony was responsible for adding a CGI Spidey as well, sometimes directly extending the stuntman’s performance. The color of the lights, and of the ground reflecting around the subject, influences the color of the suit. A computer-generated superhero can be any color the artist makes him, and that can break our suspension of disbelief, so a color match is one of many crucial components of a good composite. The VFX team on the show realized that and turned to the HLS viewing mode to check the integrity of the CGI Spidey and how well he gelled with his surroundings.

The entire image is rendered and converted to the new color space, but presented as a standard RGB image: red = hue, green = luminance, and blue = saturation. One can look at a single channel alone by displaying only that color through the digital projector in the screening room. In this way one can quickly ascertain whether the dreaded Jumanji problem is present by looking at luminance. Viewed alone, the red channel shows hue as a grayscale value, and the hue of the costume was accurate when its gray value matched that of the photographed reference in the same scene.

But saturation is also a concern. The blue channel showed the saturation of the suit as a black-and-white intensity value rather than as a color. Our eyes are terrific at differentiating shades of gray, so saturation is easier to judge in gray than in color, where too much color overrides the eye’s ability to make the distinction. We as humans like more color because it is pretty, and our television manuals have trained us to balance the color of “flesh”; those skills do not necessarily translate to a good composite.
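The HLS viewing mode can be sketched with Python’s standard `colorsys` module, whose `rgb_to_hls` returns hue, lightness, and saturation as floats from 0 to 1. Stored in (r, g, b) slot order, that tuple lands in the red = hue, green = luminance, blue = saturation layout described above. The image is assumed to be a 2D list of display-referred RGB floats, and `compare_region` (with its lists of pixel coordinates) is a hypothetical helper, not a feature of any compositing package.

```python
import colorsys


def to_hls_view(image):
    """Convert a 2D list of (r, g, b) floats in 0-1 into an HLS 'view' image
    packed into RGB slots: red = hue, green = lightness, blue = saturation."""
    return [[colorsys.rgb_to_hls(r, g, b) for (r, g, b) in row] for row in image]


def channel_as_gray(view, index):
    """Pull one channel out as grayscale (0 = hue, 1 = lightness,
    2 = saturation), like soloing a channel on the projector."""
    return [[px[index] for px in row] for row in view]


def mean(values):
    return sum(values) / len(values)


def compare_region(view, cg_pixels, plate_pixels, index):
    """Hypothetical check: average gray value of one HLS channel over a CG
    region and over a photographed reference region, each given as a list
    of (y, x) coordinates."""
    cg = mean([view[y][x][index] for y, x in cg_pixels])
    plate = mean([view[y][x][index] for y, x in plate_pixels])
    return cg, plate
```

Pure red, pure blue, and mid-gray all come out at the same lightness (0.5), which is exactly the kind of equivalence the gray channels expose. Two caveats apply to a red suit in particular. First, `colorsys` lightness is (max + min) / 2 rather than a perceptual luminance weighting. Second, hue wraps around at red: pure red sits at 0.0, while a slightly bluish red lands near 1.0, so a red costume can flicker between black and white in the hue channel, and averaging hue across that seam is misleading.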

To quote Yoda: “You must unlearn what you have learned.”

Gamma blasting, edge detection (I call it grain check), and HLS viewing modes are now part of every composite I generate. Adding the last two, extremely simple tricks gleaned from the SIGGRAPH talks, has made me a better artist. New methods come up at the conference all the time (with all of those smart people together), but the trick is working out how they apply to what you are doing. It is the little things you make connections with and twist around that make the biggest difference and push the art and innovation forward.

Thanks Spider-Man!

AG