To the IMAX Moon and Beyond

Moon Flight Science!

On September 23, 2005, Magnificent Desolation: Walking on the Moon in 3D was released on giant IMAX screens. It is a 4K stereo3D experience of the Moon landings and of speculative missions from the past and future. The film was honored with the first Visual Effects: Special Venue award by the Visual Effects Society in 2006. This multi-part article is based on a presentation about the film's visual effects given at LA SIGGRAPH the following June; it expands on that presentation to emphasize an aspect unique to the production.

Part 1: Prep and Landing – Preparing for Stereo3D in 2005 and Beyond.

Part 2: Strolling on the Moon – Stereo3D Methodology and Innovations.

Part 3: Touchdown – Moon Landing Simulation by John Knoll.

Part 4: Flight Time – The Lunar Flight Simulator of Paul Fjeld.

Magnificent Desolation: Lunar Landing Sequences

It is now in vogue for visual effects movies to contribute to science. The movie Gravity is a clear example of supercomputer simulation used for both science and entertainment. Ten years earlier, the IMAX movie Magnificent Desolation took a similar, though smaller, journey in depicting the landing of Apollo spacecraft on the Moon. Its images were created by John Knoll, Paul Fjeld (pronounced fee-YELL), and their associates.

Paul Fjeld is an expert on the Lunar Module. He was an advisor to the HBO/Tom Hanks production From the Earth to the Moon, helped write the script for the episode Spider, and served as on-set consultant for Magnificent Desolation. He contributes regularly to the Apollo Lunar Surface Journal and to the restoration of NASA hardware on display at museums, including the Smithsonian. He programs 3D computer graphics, including LMSim, an Apollo Lunar Module flight simulator. He also follows Chesley Bonestell’s tradition of space art for NASA, inspired by his time at Mission Control in Houston, which introduced him to the Apollo spacecraft and their operation. (The LM simulator was inert, but the CM simulator was live; he used them both.)

“While covering the Apollo 17 mission in Houston, I had the extreme good fortune to meet some of the Apollo instructors. I got to spend about 20 hours learning as much as I could about flying the spacecraft during overnight maintenance periods. When it was convenient, I would come at night and use a part of the simulator that they weren’t fixing for the next day’s run. If they needed a switch changed, they’d call me, saving themselves the trouble of climbing up and into the capsule.”

All this experience in the physical LM simulator made Paul a knowledgeable source about the lunar hardware, but that can be a double-edged sword. He once gave an interview for a FOX television show called “Conspiracy Theory: Did We Land on the Moon?”, hosted by Mitch Pileggi of X-Files fame. The show’s premise is conspiratorial, claiming the Moon landings were faked. Paul’s contribution is accurate, but it appears to have been used out of context. We will use this as an opportunity to set the record straight:

I am an avid revealer of the obviousness of the visual and historical proof of the Moon Landings and the easily disproved Hoax points, which I spent over an hour slaughtering on film for the Fox show – they used one 10-second clip. I can’t prove the reality of the landings by doing the simulation, but I can revel in the data set and what it proved of the genius and quality of engineering that got us there.

-Paul Fjeld

Working with the entertainment industry is often tainted by an entertaining, though occasionally false, premise. The producers of Magnificent Desolation wanted the opposite, and their funding allowed some real science to take place. The use of simulation in Magnificent Desolation is another step toward science and entertainment melding together in an “archeological dig” through NASA history.

What follows is a “slice of time” from the production of the visual effects, when not all of the problems had been solved. It shows the evolution of the simulation and the constant re-analysis of the data needed to reach a result. Not only does the simulation create visuals for the IMAX screen, it may also reveal the challenges the astronauts faced in real time, and their implications for future planetary missions.

Part 4: Flight Time

This article is extracted from email interviews with the creator of the Magnificent Desolation flight simulator, and it contains many terms familiar mainly to rocket scientists. Little effort has been made to “dumb this down,” as the tool is a specialized scientific instrument applied to visual effects.

Paul Fjeld (written during the middle of production):

“I started with the assumption that the Grumman Flight Performance Evaluation Report graphs of the landing data sets were accurate. I thought I could use the flown Pitch, Roll, Yaw attitudes, as reported every 2 seconds, along with the Hdot in P66 (semi-manual final landing phase program) and Throttle in P64 (semi-automatic approach phase program), to simulate the trajectory using my LMSim program which has in it the basic Guidance System Operations Plans algorithms. I do not simulate (yet) the unmodelled bias torques and forces, including propellant slosh and offset engine thrust among others.”
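For readers new to this kind of replay, the core idea can be sketched as a point-mass integration: the recorded attitude points the descent engine, the throttle sets its magnitude, and lunar gravity pulls down. The Python below is a minimal sketch under stated assumptions, not LMSim: it treats the LM as a point of constant mass, ignores the slosh and offset-thrust effects mentioned above, and uses a hypothetical tilt convention plus illustrative (not flight) mass and thrust values.

```python
import math

G_MOON = 1.62  # lunar surface gravity, m/s^2


def thrust_direction(pitch_deg, roll_deg):
    """Unit thrust vector in a local frame (x downrange, y crossrange, z up).

    Hypothetical convention: pitch tilts the thrust downrange, roll tilts it
    crossrange; (0, 0) means the thrust points straight up.
    """
    p, r = math.radians(pitch_deg), math.radians(roll_deg)
    return (math.cos(r) * math.sin(p), math.sin(r), math.cos(r) * math.cos(p))


def replay(samples, dt, pos, vel, mass_kg=7000.0, max_thrust_n=45000.0):
    """Integrate (pitch_deg, roll_deg, throttle) samples with semi-implicit Euler.

    mass_kg and max_thrust_n are illustrative placeholders, not LM data.
    Returns one (x, y, z) position per sample.
    """
    x, y, z = pos
    vx, vy, vz = vel
    path = []
    for pitch, roll, throttle in samples:
        dx, dy, dz = thrust_direction(pitch, roll)
        acc = throttle * max_thrust_n / mass_kg  # thrust acceleration, m/s^2
        vx += acc * dx * dt
        vy += acc * dy * dt
        vz += (acc * dz - G_MOON) * dt
        x, y, z = x + vx * dt, y + vy * dt, z + vz * dt
        path.append((x, y, z))
    return path
```

With the vehicle upright and the throttle set to `G_MOON * mass_kg / max_thrust_n`, the position holds still; a small constant roll makes it slide crossrange, which is the kind of behavior that has to be checked against the flown trajectory.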

“I measured and entered by hand all the data points and graphed them to compare with what I had used, and sent them. This was the confirmed raw data. I then used a simple (de Casteljau) Bézier curve formula to plot a smooth path through the points, then rectified the time points by linearly interpolating the curve to sit on the 1/24th-of-a-second divisions.”
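The smoothing and resampling step can be sketched as follows. The de Casteljau algorithm evaluates a Bézier curve by repeated linear interpolation between its control points. In this sketch, one simple reading of the process, each 2-second sample is treated as a control point of a single Bézier curve over (time, value) pairs; the curve is evaluated densely, and linear interpolation then places values on exact 1/24-second frame times. The single-curve treatment and the `dense` sample count are assumptions for illustration. Note that a Bézier curve passes only through its first and last control points, so interior data are approximated rather than hit exactly.

```python
def de_casteljau(points, t):
    """Evaluate a Bezier curve with control points [(x, y), ...] at t in [0, 1]."""
    pts = list(points)
    while len(pts) > 1:
        # Replace each adjacent pair with the point a fraction t of the way between them.
        pts = [((1 - t) * x0 + t * x1, (1 - t) * y0 + t * y1)
               for (x0, y0), (x1, y1) in zip(pts, pts[1:])]
    return pts[0]


def resample_to_frames(samples, fps=24, dense=2000):
    """Smooth (time_s, value) samples with one Bezier curve, then place the curve
    on exact 1/fps time steps by linear interpolation between dense curve points.

    `dense` (number of curve evaluations) is an illustrative choice.
    """
    curve = [de_casteljau(samples, i / (dense - 1)) for i in range(dense)]
    t_start, t_end = samples[0][0], samples[-1][0]
    n_frames = int(round((t_end - t_start) * fps)) + 1
    frames = []
    j = 0
    for k in range(n_frames):
        t = t_start + k / fps
        # Advance to the curve segment [curve[j], curve[j + 1]] that brackets t.
        while j < dense - 2 and curve[j + 1][0] < t:
            j += 1
        (ta, va), (tb, vb) = curve[j], curve[j + 1]
        w = 0.0 if tb == ta else (t - ta) / (tb - ta)
        frames.append((t, va + w * (vb - va)))
    return frames
```

For example, `resample_to_frames([(0.0, 0.0), (2.0, 10.0), (4.0, 0.0)])` yields 97 frames, and the frame at 2.0 s comes out near 5 rather than 10. That gap is the approximating behavior of Bézier smoothing, and a reminder that this kind of smoothing can shift the data it is meant to tidy.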

“Using that expanded data set I drove it through my simulation to see where I ended up, first in P66 by modifying my code to use the high-rate, actual rate of descent to calculate a throttle setting, instead of the 1/sec rate of the normal simulation. I got the descent rate fine and an altitude number of around 370 feet at the start of that last velocity nulling phase. Setting the initial velocities took some work – just iterating – but I always ended up very left and with a residual forward velocity of about 3 fps.”
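The P66 change described here amounts to recomputing the throttle from the measured descent rate at every 1/24-second frame rather than once per second. A minimal sketch of such a rate-of-descent law, assuming a simple first-order controller rather than the actual Apollo guidance equations: the commanded vertical acceleration closes the gap between target and actual descent rate over `tau` seconds, gravity is added back, and the result becomes a clamped throttle fraction. The time constant `tau`, `min_throttle`, the mass, and the maximum thrust are all hypothetical placeholders.

```python
G_MOON = 1.62  # lunar surface gravity, m/s^2


def rod_throttle(hdot, hdot_cmd, mass_kg, max_thrust_n, tau=1.5, min_throttle=0.1):
    """Throttle fraction that nulls the descent-rate error over roughly `tau` s.

    hdot and hdot_cmd are vertical velocities in m/s (negative = descending).
    tau and min_throttle are hypothetical tuning values.
    """
    accel_cmd = G_MOON + (hdot_cmd - hdot) / tau  # required upward thrust acceleration
    throttle = accel_cmd * mass_kg / max_thrust_n
    return max(min_throttle, min(1.0, throttle))


def simulate_descent(h0, hdot0, hdot_cmd, seconds, rate_hz=24,
                     mass_kg=7000.0, max_thrust_n=45000.0):
    """Vertical-only descent with the throttle recomputed rate_hz times per second.

    Returns the final (altitude_m, vertical_velocity_m_s).
    """
    dt = 1.0 / rate_hz
    h, hdot = h0, hdot0
    for _ in range(int(seconds * rate_hz)):
        throttle = rod_throttle(hdot, hdot_cmd, mass_kg, max_thrust_n)
        hdot += (throttle * max_thrust_n / mass_kg - G_MOON) * dt
        h += hdot * dt
    return h, hdot
```

Starting at `hdot0=-3.0` with a target of `-1.0`, the descent rate settles on the target within a few time constants. Changing `rate_hz` shows how the update rate interacts with `tau`, which is the knob that moving from a 1-per-second to a per-frame throttle calculation turns.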

“So I tried the P64 Approach Phase data set. It was worse. I began to suspect my own (now more than 10-year-old [in 2005]) code, and began stripping away and working up, from first principles, the formulae for everything, including attitude. I pushed the data sets through this new, ‘better’ and confirmed-correct code, but the generated trajectory still had that big left hook instead of a right one.”

“Then I cobbled together parts of my old sim to take automated inputs at specific times during P64 and recreated the redesignations that Falcon [the Apollo 15 LM] did. It flew normally, but the resulting attitude profile did not include as big a roll left. Finally, it dawned on me that the guidance dispersions they had to correct for during the descent might have meant that the inertial orientation system matrix was cock-eyed in roll!”
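Why a small roll error matters can be estimated with one line of physics. If the recorded roll angles were measured against a platform whose roll reference was itself off by a bias, replaying them literally tilts the simulated thrust vector sideways by that bias, and the vehicle is pushed crossrange. The sketch below assumes near-hover thrust (thrust acceleration equal to lunar gravity), starts from rest, and ignores any corrective control; the 9-degree value echoes the roll discrepancy described in the figure discussion, and the 10-second duration is arbitrary.

```python
import math

G_MOON = 1.62  # lunar surface gravity, m/s^2


def lateral_drift(bias_deg, seconds, thrust_accel=G_MOON):
    """Crossrange acceleration (m/s^2) and displacement (m) from a thrust vector
    tilted sideways by `bias_deg`, starting from rest.

    Assumes constant thrust acceleration (default: hover, T/m = g) and no
    corrective control.
    """
    a_lat = thrust_accel * math.sin(math.radians(bias_deg))
    return a_lat, 0.5 * a_lat * seconds ** 2
```

`lateral_drift(9.0, 10.0)` gives roughly 0.25 m/s² and 12.7 m of sideways travel: enough, compounded over a descent, to turn an expected right-hand approach into a left hook.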

“On the train to New York I began playing with different combinations of roll biases, including factors for negative and positive pitch and roll, that gave me what I wanted. I decided that attitude angles differing by less than 10 degrees should be okay, and let the physics drive the motion rather than move where I wanted with non-corresponding attitudes. It looked reasonable, and I ‘osculated’ the P64 end conditions with my guessed-at start conditions in P66.”

“[fig. 1] shows the flight attitude data in black and my final pitch, roll, and yaw in blue, red, and green respectively. You can see how much the roll (middle) is off at the beginning (maybe 9 degrees), but I fillet it to 1/3 of the true flight value when P66 starts (102 seconds). There is also a bit of fudging in pitch at the end – not as much pitch down to keep the forward motion at 1.5 fps as it was. Dave [Scott] still backs up about 70 feet into that crater…”

“The jets were also harder than I expected, but I shouldn’t have been surprised. The real attitude curves should be a blend of the small, damped back-and-forth of fuel slosh (though much less than on Apollo 11 & 12) and the constant jet accelerations at the boundaries of constant attitude rates. The smoothing I did on the 2-second data introduced artifacts: when I plot the rate and acceleration curves, the rates look ragged and the accelerations vary [see fig. 1 and fig. 2]. I combined the Pitch and Roll values through a 45-degree skewed U,V axis rotation matrix, as in the real machine, and accumulated the jet firings through a simple filter. [fig. 2] shows the U channel acceleration, exaggerated by a factor of 5, against the Pitch and Roll values. This skewing of Pitch and Roll control into U/V prevents a conflict when Roll and Pitch are commanded at the same time, where you might otherwise get two jets firing opposite one another. I ended up within 90 percent of the jet on-times.”
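Two steps in this paragraph lend themselves to a short sketch: differentiating the smoothed attitude tables to get angular rates and accelerations, and rotating pitch and roll by 45 degrees into U and V channels before deciding when jets fire. The Python below is illustrative only, not Paul's code or the LM digital autopilot. It uses central differences; the U/V sign convention (which sum is U and which is V) is an assumption; and a hypothetical acceleration `threshold` stands in for his "simple filter" to flag frames where a jet is probably firing.

```python
import math


def central_diff(values, dt):
    """First derivative by central differences (one-sided at the two ends)."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two samples")
    out = []
    for i in range(n):
        lo, hi = max(i - 1, 0), min(i + 1, n - 1)
        out.append((values[hi] - values[lo]) / ((hi - lo) * dt))
    return out


def to_uv(pitch, roll):
    """Rotate pitch/roll quantities by 45 degrees into U/V channels.

    The sign convention here is an illustrative assumption.
    """
    s = math.sqrt(0.5)
    return s * (pitch + roll), s * (pitch - roll)


def jet_on_frames(pitch_deg, roll_deg, dt, threshold=0.5):
    """Flag frames whose U or V angular acceleration magnitude exceeds
    `threshold` (deg/s^2, hypothetical): a crude stand-in for jet firings."""
    pitch_acc = central_diff(central_diff(pitch_deg, dt), dt)
    roll_acc = central_diff(central_diff(roll_deg, dt), dt)
    flags = []
    for pa, ra in zip(pitch_acc, roll_acc):
        u, v = to_uv(pa, ra)
        flags.append((abs(u) > threshold, abs(v) > threshold))
    return flags
```

A constant pitch rate produces no flags, while a steady roll acceleration lights up both U and V, since each skewed channel carries a share of a pure roll command. Differentiating twice also amplifies any wiggle left by smoothing, which is one way ragged rate and acceleration curves can arise from smoothed 2-second data.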

Conclusion

“The trajectories are 80–90 percent accurate. I was not able to get much better than that, as I don’t know which of the data reports to believe. There is a definite conflict between the Grumman and NASA reports, and I am going to find out what the real deal was once I can get out to the Houston archive.”

“Match-move vs. trajectory analysis: I think 3D tracking is better for real ground truth, especially if one can find a way to decouple the camera translations caused by rotation about the LM CG from real translations (really only a problem when you get close to the surface). Once you have generated decent position and rotation data, I think a sim could refine it quite nicely. Generating the derivatives of attitude angle for the jet firing times is definitely the way to go! (This method was used by John Knoll’s ILM team on Star Trek: First Contact for the escape pods.)”

“I should mention that, because all of this was fresh in my head, I was able to make a decent contribution to the big Northrop Grumman/Boeing/NASA technical meeting on the new CEV in New York on Friday. The NASA guy wanted to know about pin-point landing and if there was something we could take from Apollo. Boy did he hear from me!”

Afterward

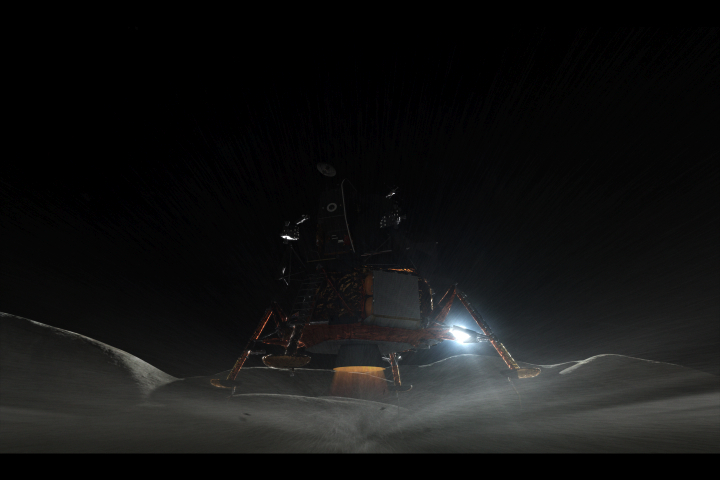

The partnership with John Knoll on Magnificent Desolation paid off in other work over the years. John Knoll built the illustration above with some technical input from Paul, and the two contributed to the Google Moon explorer. As Paul has said online:

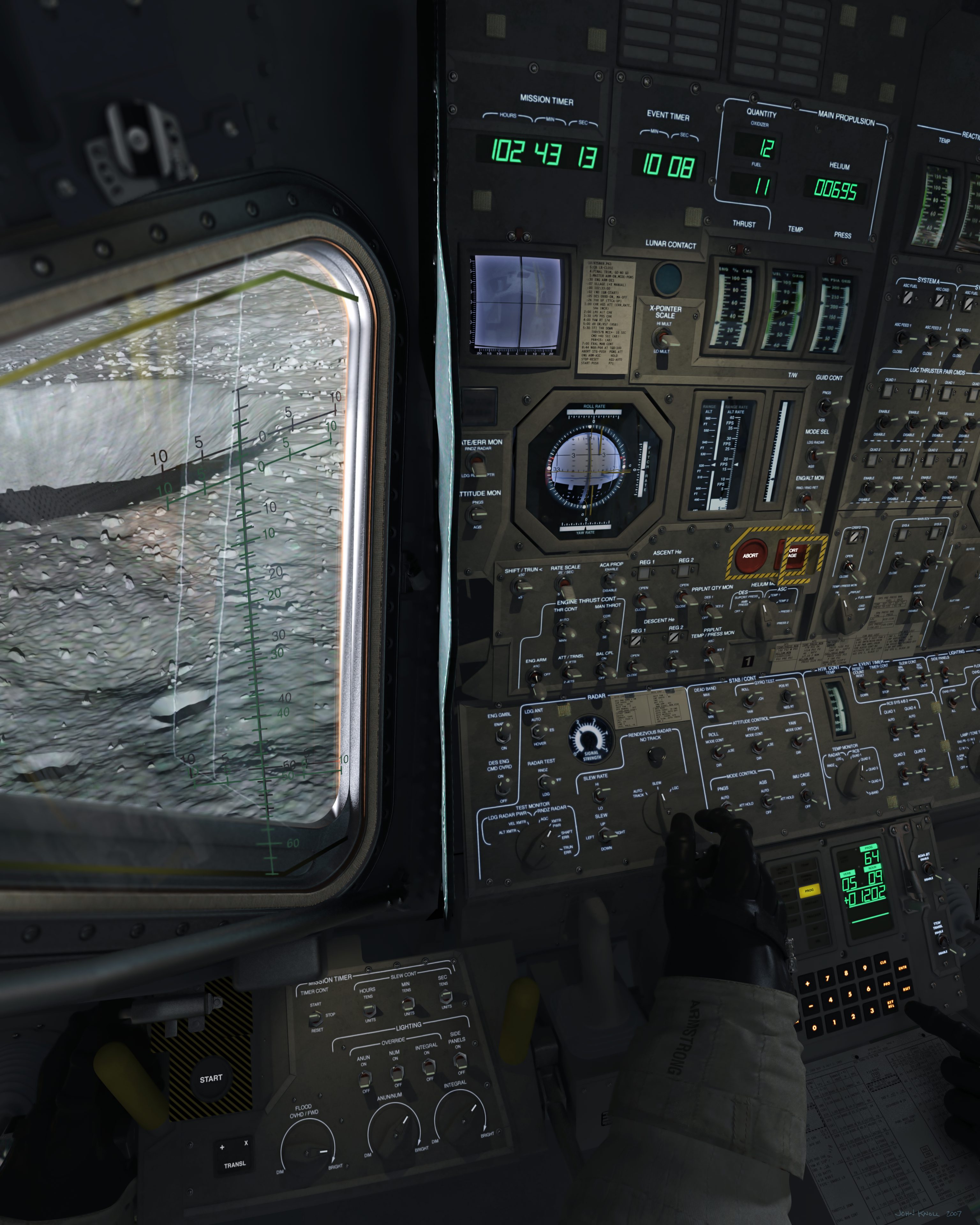

“…select the Guided Tours directory and then Apollo 11. Andy Chaikin narrates with Buzz the landing and lunar walks. The trajectory and inside cockpit view were generated by John Knoll (Oscar-winning Visual Effects Supervisor) and are extremely accurate. I’ve superimposed the LRO image of the landing area (semi-transparently) and it looks really cool. The better data will make it so we can just hang out on the surface!”

If you want to try the controls of a Lunar Module yourself, someone has more recently built an online simulator as a toy (it is not Paul Fjeld’s work). Here is a video of that modern software in action. It, too, is inspired by the fantastic accomplishment of so long ago; it is not from the IMAX film, but you can compare it to the test renders above (its makers may have seen the film and positioned their camera to rebuild those shots). If only the data used for this high-quality toy had been available in 2005!

If you wish to build your own simulator:

“The source code [to the Lunar Module computer] has been transcribed or otherwise adapted from digitized images of a hardcopy from the MIT Museum. The digitization was performed by Paul Fjeld.”

This concludes the coverage on Magnificent Desolation.

Thanks To:

Tom Hanks

IMAX Corporation

John Knoll

Paul Fjeld

LiveWire Productions

All the crew at SFD, and our families

SIGGRAPH

Visual Effects Society

All of you for reading along

The Astronauts who went on location for us.
